Awesome Safety-Critical AI

👋 Welcome to Awesome Safety-Critical AI - a curated space for practitioners, researchers and engineers committed to building intelligent systems that are as reliable as they are capable.

Inspired by systems thinking and engineering rigour, this project focuses on how to move from clever prototypes to fault-tolerant, production-grade ML systems.

Whether you’re working on autonomous vehicles, medical diagnosis, or airborne systems, this collection offers a blueprint for AI that can be trusted when it matters most.

AI in critical systems is not about polishing demos or chasing benchmarks. It’s about anticipating chaos - and designing for it.

This isn’t just another (awesome) list. It’s a call to action!

Table of Contents

- 🐇 Introduction

- 🌟 Editor’s Choice

- 🏃 TLDR

- 📝 Articles

- ✍️ Blogs / News

- 📚 Books

- 📜 Certifications

- 🎤 Conferences

- 👩‍🏫 Courses

- 📙 Guidelines

- 🤝 Initiatives

- 📋 Reports

- 🛣️ Roadmaps

- 📐 Standards

- 🛠️ Tools

- 📺 Videos

- 📄 Whitepapers

- 👷🏼 Working Groups

- 👾 Miscellaneous

- 🏁 Meta

- About Us

- Contributions

- Contributors

- Citation

🐇 Introduction

What is a critical system?

Critical systems are systems whose failure can lead to injury 🤕, loss of life ☠️, environmental harm 🌱🚱, infrastructure damage 🏗️💥, or mission failure 🎯.

| Application | Industry Vertical | Description | Failure Impact |
|---|---|---|---|
| Patient Monitoring | Healthcare | Tracks vital signs | Failure can delay life-saving interventions |
| Aircraft Navigation | Aerospace / Aviation | Ensures safe flight paths | Errors can lead to accidents |
| Power Grid Control | Energy | Manages electricity distribution | Failures can cause blackouts |
| Command & Control | Defence | Coordinates military actions | Failure risks national security |
| Industrial Automation Control | Manufacturing | Oversees production processes | Malfunction can cause damage or injury |
| Core Banking System | Finance | Handles transactions and account data | Downtime can affect financial operations |

These systems are expected to operate with exceptionally high levels of safety, reliability and availability, often under unclear and unpredictable conditions.

They’re the kind of systems we rarely think about… until something goes terribly wrong 🫣

| Incident | Year | Description | Root Cause | Industry Vertical | References |
|---|---|---|---|---|---|
| Therac-25 Radiation Overdose | 1985–1987 | Radiation therapy machine gave fatal overdoses to multiple patients | Race conditions and lack of safety interlocks; poor error handling | Healthcare | Wikipedia, Stanford |
| Lufthansa Flight 2904 | 1993 | Airbus A320 crashed during landing in Warsaw due to thrust reverser failure | Reversers disabled by software logic when gear compression conditions weren’t met | Aviation | Wikipedia, Simple Flying |
| Ariane Flight V88 | 1996 | Ariane 5 rocket self-destructed seconds after launch | Unhandled overflow converting 64-bit float to 16-bit integer | Aerospace | Wikipedia, MIT |
| Mars Climate Orbiter | 1999 | NASA probe lost due to trajectory miscalculation | Metric vs imperial unit mismatch between subsystems | Space Exploration | NASA |
| Patriot Missile Failure | 1991 | Failed interception of Scud missile during Gulf War | Rounding error in floating-point time tracking caused significant drift | Defence | Barr Group, GAO |
| Knight Capital Loss | 2012 | Trading system triggered erratic market orders causing massive financial loss | Deployment of obsolete test code; no safeguards for live operations | Finance / Trading | Henrico Dolfing, CNN |
| Toyota Unintended Acceleration | 2009–10 | Reports of unexpected vehicle acceleration and crashes | Stack overflow and memory corruption in embedded ECU software | Automotive | SAE, Wikipedia |
| F-22 Raptor GPS Failure | 2007 | Multiple jets lost navigation after crossing the International Date Line | Software couldn’t handle date transition; triggered reboot | Aerospace / Defence | FlightGlobal, Wikipedia |
| Heartbleed Bug | 2014 | Security vulnerability in OpenSSL exposed private server data | Improper bounds checking in the heartbeat extension of OpenSSL | Cybersecurity / IT | Heartbleed, CNET |
| T-Mobile Sidekick Data Loss | 2009 | Users lost personal data during server migration | Software mishandling during data center transition led to irreversible loss | Telecom / Cloud Services | TechCrunch, PCWorld |

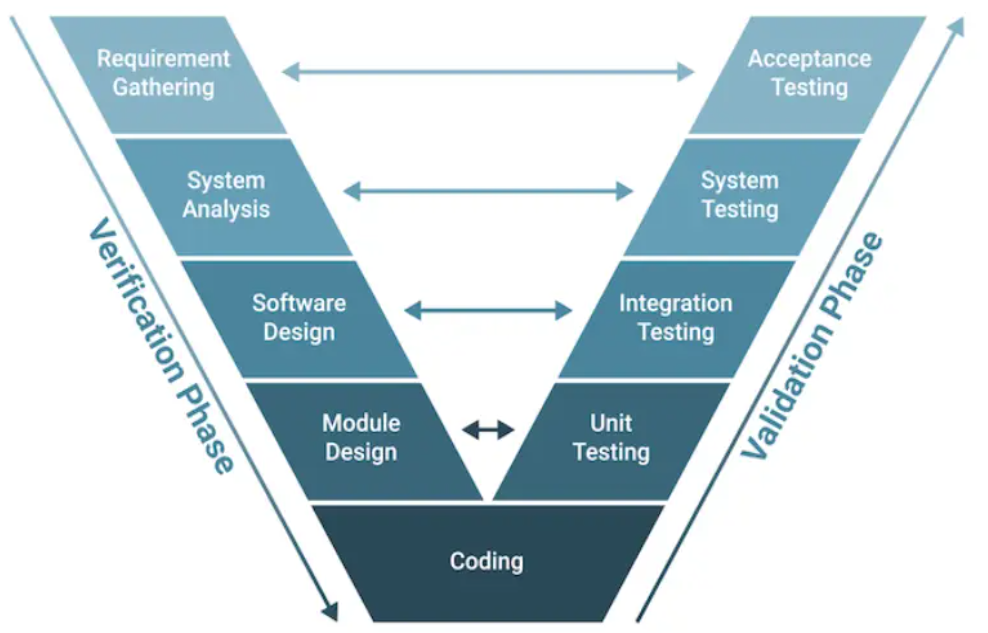

When the stakes are this high, conventional software engineering practices must be complemented by rigorous verification, validation and certification processes that are designed to ensure system integrity.

Critical systems don’t forgive shortcuts. Only engineering rigour stands between order and disaster.

TL;DR Critical systems are built on trust - and trust is built on rock-solid engineering.

AI in Critical Systems

So, where does that leave us? Is there room for AI in critical systems?

This isn’t just a theoretical question - we’re already well beyond the realm of hypotheticals.

From making life-or-death decisions in the ICU to controlling UAVs, performing surveillance and threat detection, and powering self-driving cars, intelligent systems aren’t just emerging in these domains - they’re already fully operational.

| Use Case | Brief Description | Industry Domain | References |
|---|---|---|---|
| Predicting ICU Length of Stay | AI models use patient data to forecast ICU duration, improving resource allocation and care planning. | Healthcare | INFORMS, Springer |
| AI in Radiation Therapy Planning | Optimizes dose targeting using historical patient models, improving treatment precision and safety. | Healthcare | Siemens Healthineers |
| Self-Driving Cars | Powers perception, decision-making, and control systems for autonomous vehicles. | Transportation | Built In, Rapid Innovation |
| Autonomous Drone Navigation | Enables drones to navigate complex terrain without GPS; supports rescue and defense operations. | Aerospace / Defense | MDPI, Fly Eye |
| AI-Based Conflict Detection in ATC | Forecasts aircraft trajectories to alert controllers of potential collision risks. | Aerospace / Defense | Raven Aero, AviationFile |
| Remote Digital Towers for Airports | AI interprets visual data to assist air traffic controllers in low-visibility conditions. | Aerospace / Defense | Airways Magazine |
| Predictive Maintenance in Nuclear Reactors | Analyzes reactor sensor data to detect early failures, preventing major accidents. | Energy | Accelerant, IAEA |
| AI-Assisted Reactor Control Systems | Supports operators by modeling physical processes and recommending safety actions in real time. | Energy | Uatom.org, Springer |
| Autonomous Navigation for Cargo Ships | Enables real-time path planning to avoid obstacles and optimize maritime routes. | Transportation | MaritimeEd, ShipFinex |
| AI-Based Collision Avoidance at Sea | Detects and responds to high-risk vessel situations using visual and radar data fusion. | Transportation | Ship Universe |
| AI-Driven Fraud Detection | Identifies anomalous financial transactions and flags potential fraud in real time. | Financial Systems | Upskillist, Xenoss |
| AI for Compliance Monitoring | Uses NLP to parse documents and logs for regulatory breaches, supporting audits and governance. | Financial Systems | Digital Adoption, LeewayHertz |
| AI in Wildfire Early Detection | Processes satellite and sensor data to detect hotspots and alert emergency services. | Environmental Safety | NASA FireSense, PreventionWeb |

Building these systems is no walk in the park. ML brings powerful capabilities, but also adds layers of complexity and risk that need to be addressed through careful engineering.

While its ability to learn patterns and make data-driven decisions is unmatched in some domains, the adoption of AI in high-stakes environments must be tempered with caution, transparency, and a sharp understanding of its limitations.

Let’s briefly recap some of the most important…

1. Models can and will make mistakes

Better models may make fewer mistakes, but mistakes are generally unavoidable.

Mistakes are not a sign of poor engineering - they are an intrinsic feature of intelligence.

Working with AI means accepting this uncertainty and designing systems that can handle it gracefully.

2. Mistakes can be strange and unpredictable

AI doesn’t always fail in ways that make sense to us.

It might misclassify a stop sign with a sticker as a speed limit sign or switch treatment recommendations based on the user’s language.

Unlike traditional software, which follows explicit rules, AI learns from data and generalises.

Generalization allows models to make predictions beyond what they’ve seen so far, but it’s ultimately imperfect because the real world is messy, ever-changing, and rarely fits nicely into learned patterns.
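One widely cited explanation for this brittleness is that many models behave almost linearly in high-dimensional input spaces, so many tiny per-feature nudges can add up to a large change in the output. The sketch below illustrates the idea of the fast gradient sign method (FGSM) on a hypothetical 1,000-feature linear classifier; the weights, inputs and ε are made up for illustration and this is not a real traffic-sign model.

```python
from typing import List


def score(weights: List[float], bias: float, x: List[float]) -> float:
    """Linear decision score: positive -> "stop sign", negative -> "other"."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm_linear(weights: List[float], x: List[float], epsilon: float) -> List[float]:
    """Gradient-sign perturbation for a linear model.

    The gradient of w.x + b with respect to x is w, so moving each feature by
    -epsilon * sign(w_i) lowers the score by epsilon * sum(|w_i|).
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input, chosen only to illustrate the effect.
n_features = 1000
weights = [0.1 if i % 2 == 0 else -0.1 for i in range(n_features)]
bias = 0.0
x_clean = [0.51 if i % 2 == 0 else 0.50 for i in range(n_features)]

x_adv = fgsm_linear(weights, x_clean, epsilon=0.01)

print(f"clean score:       {score(weights, bias, x_clean):+.2f}")
print(f"adversarial score: {score(weights, bias, x_adv):+.2f}")
print(f"largest per-feature change: {max(abs(a - b) for a, b in zip(x_clean, x_adv)):.3f}")
```

No single feature moves by more than 0.01, yet the summed effect (ε × ‖w‖₁ = 0.01 × 100 = 1.0) is enough to flip the sign of the score. Physical attacks such as stickers on signs are more involved, but they rely on the same kind of sensitivity.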

3. Model outputs are often probabilistic

Traditional software is predictable: identical inputs yield identical outputs.

In contrast, ML models, especially those involving deep learning, can break this rule and exhibit probabilistic behavior.

Their outputs are a function not only of the input features, but also of things like model architecture, learned weights, training data distribution, hyperparameters (e.g. learning rate, batch size), optimization methods, and more.

That said, inference is often deterministic. Once trained, most models are capable of producing consistent outputs for a given input, assuming fixed weights and no funky runtime randomness.

This determinism means that systematic errors and biases are reproducible - models will consistently make the same mistakes.

Moreover, models trained on similar datasets often converge to similar representations, leading to shared failure modes and blind spots. So while ML systems may appear dynamic and random, their behavior can be quite predictable.
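The difference between probabilistic outputs and deterministic inference can be shown in a few lines. This is a minimal sketch, assuming a toy 3-class linear model with hypothetical fixed weights: the forward pass and the argmax decision repeat exactly, randomness only appears if the system samples from the predicted distribution (as some generative models do), and even that becomes reproducible once the random generator is seeded.

```python
import math
import random
from typing import List

# Hypothetical "trained" weights for a 3-class linear model with 2 input features.
WEIGHTS: List[List[float]] = [[0.2, -0.1], [0.05, 0.3], [-0.4, 0.1]]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_proba(x: List[float]) -> List[float]:
    """Deterministic forward pass: fixed weights + same input -> same output."""
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in WEIGHTS]
    return softmax(logits)


def predict_argmax(x: List[float]) -> int:
    """Deterministic decision rule: always pick the most probable class."""
    probs = predict_proba(x)
    return max(range(len(probs)), key=probs.__getitem__)


def predict_sampled(x: List[float], rng: random.Random) -> int:
    """Stochastic decision rule: sample a class from the predicted distribution."""
    probs = predict_proba(x)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


x = [1.0, 2.0]
print("argmax decisions:", [predict_argmax(x) for _ in range(5)])  # always the same class

# Sampling varies from draw to draw, but two generators with the same seed agree.
rng_a, rng_b = random.Random(7), random.Random(7)
draws_a = [predict_sampled(x, rng_a) for _ in range(10)]
draws_b = [predict_sampled(x, rng_b) for _ in range(10)]
print("seeded draws match:", draws_a == draws_b)
```

Seeding only controls randomness that the code explicitly requests. On GPUs, parallel floating-point reductions and batch-dependent kernels can still make a nominally deterministic forward pass differ in its last bits between runs.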

4. Data and models can change over time

Data and models are not static things. They’ll evolve continuously due to changes in the environment, user behavior, hardware, regulations and more.

Imagine you’re building a supervised learning system to detect early signs of pneumonia in chest X-rays.

Over time, several factors can cause both the data and the model to evolve:

- Data Drift: the original training data may come from a specific hospital using a particular X-ray machine. As the system is deployed in new hospitals with different imaging equipment, patient demographics, or scanning protocols, the visual characteristics and quality of the X-ray images may vary significantly. This shift in the input distribution without an accompanying change in the task can reduce the model’s diagnostic accuracy. This kind of drift doesn’t crash the model; it just makes it quietly wrong.

- Concept Drift: clinical knowledge and medical understanding can also evolve. For instance, new variants of respiratory diseases may present differently on X-rays, or diagnostic criteria for pneumonia may be updated. The relationship between image features and the correct diagnosis changes, requiring updates to the labeling process and model retraining.

- Model Updates: the model is periodically retrained with new data to improve diagnostic performance or reduce false positives. These updates might involve changes in architecture, training objectives, or preprocessing steps. While performance may improve on average, these changes can introduce new failure modes and even regressions in certain edge cases. Model changes must be managed and monitored carefully, with rigorous testing and rollback plans.

- External Factors: regulatory changes or clinical guidelines may require the model to provide additional outputs, such as severity scores or explainability maps. This requires collecting new types of annotations and modifying the model’s output structure.
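One way to catch the silent degradation caused by data drift is to compare the distribution of a simple input statistic in production against the training data. A minimal sketch, assuming a hypothetical per-image feature (mean pixel intensity) and the Population Stability Index (PSI), which is only one of several common drift scores (Kolmogorov-Smirnov tests and embedding-based detectors are alternatives):

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index (PSI) between two samples of one scalar feature.

    Bin edges are derived from the reference sample; values outside its range
    are clamped into the first/last bin. A small epsilon avoids log(0).
    """
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # guard against a constant reference sample

    def proportions(sample: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in sample:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [c / len(sample) for c in counts]

    eps = 1e-6
    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log((c + eps) / (r + eps)) for r, c in zip(ref_p, cur_p))


# Hypothetical per-image feature: mean pixel intensity, scaled to [0, 1].
training_site = [0.40 + 0.01 * (i % 20) for i in range(1000)]
same_scanner = list(training_site)
new_scanner = [v + 0.08 for v in training_site]  # e.g. brighter images from new equipment

for name, sample in [("same scanner", same_scanner), ("new scanner", new_scanner)]:
    value = psi(training_site, sample)
    status = "investigate" if value > 0.25 else "stable"
    print(f"{name}: PSI = {value:.3f} -> {status}")
```

The 0.25 cut-off follows a commonly quoted rule of thumb for a significant shift; in a safety-critical setting the threshold, the monitored features and the response (alert, review, or fallback) would need to be justified for the specific application.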

In safety-critical domains like medical imaging, the evolution of data and models is inevitable. As such, systems must be designed with this in mind, embedding mechanisms for monitoring, validation, and traceability at every stage.

By proactively addressing data and model drift, automating model updates and defining processes for dealing with external influences, teams can ensure that AI systems remain not only accurate but also trustworthy, transparent, and robust over time.
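The Model Updates point, that an average improvement can hide regressions in specific edge cases, suggests gating each retrained model on per-slice metrics rather than a single aggregate. A minimal sketch, assuming hypothetical slice names, accuracy figures and a 1-point tolerance chosen purely for illustration:

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GateResult:
    approved: bool
    regressions: List[str]


def regression_gate(
    baseline: Dict[str, float],
    candidate: Dict[str, float],
    max_drop: float = 0.01,
) -> GateResult:
    """Approve a retrained model only if no evaluation slice drops by more than max_drop.

    A slice missing from the candidate's results counts as a regression, so an
    incomplete evaluation run cannot silently pass the gate.
    """
    regressions = [
        name
        for name, base_score in baseline.items()
        if name not in candidate or base_score - candidate[name] > max_drop
    ]
    return GateResult(approved=not regressions, regressions=regressions)


def mean(scores: Dict[str, float]) -> float:
    return sum(scores.values()) / len(scores)


# Hypothetical per-slice accuracies for the chest X-ray example.
baseline = {"hospital_A": 0.91, "hospital_B": 0.88, "pediatric": 0.84, "portable_xray": 0.80}
candidate = {"hospital_A": 0.94, "hospital_B": 0.91, "pediatric": 0.85, "portable_xray": 0.74}

print(f"mean accuracy: {mean(baseline):.4f} -> {mean(candidate):.4f}")
print(regression_gate(baseline, candidate))
```

In this made-up data the mean improves slightly while the portable X-ray slice drops by six points, so the gate rejects the candidate. A rejected candidate simply leaves the current model in service, which is the simplest form of rollback plan.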

5. Zero-error performance is expensive and often impossible

Here’s an uncomfortable truth: no AI system will ever be perfect.

No matter how sophisticated your architecture, how clean your data, or how rigorous your testing - your system will eventually encounter scenarios it can’t handle.

The pursuit of perfection isn’t just futile; it’s dangerous because it creates a false sense of security. Perfection is a mirage.

Instead of chasing the impossible, safety-critical AI demands a different mindset: assume failure and design for it.

This means embracing design principles that prioritize resilience, transparency, and human-centered control:

- Graceful Degradation: When AI fails - and it will - what happens next? Does the system shut down safely, fall back to simpler heuristics, or alert human operators? The difference between a minor incident and a catastrophe often lies in how elegantly a system handles its own limitations.

- Human-AI Collaboration: AI doesn’t have to carry the entire burden. The most reliable critical systems often combine AI capabilities with human oversight, creating multiple layers of validation and intervention. Think of AI as a highly capable assistant, not an infallible decision-maker.

- Monitoring and Circuit Breakers: Just as electrical systems have circuit breakers to prevent dangerous overloads, AI systems need mechanisms to detect when they’re operating outside their safe boundaries. Confidence thresholds, anomaly detection, and performance monitoring aren’t nice-to-haves - they’re essential safety features.

- Failure Mode Analysis: Traditional safety engineering asks “what could go wrong?” and designs accordingly. AI systems demand the same rigor. What happens when your model encounters adversarial inputs, when data quality degrades, or when edge cases compound in unexpected ways?

The goal isn’t to eliminate failure - it’s to make failure safe, detectable, and recoverable. This isn’t just good engineering practice; it’s an architectural requirement that separates safe systems from disasters waiting to happen.
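Graceful degradation, human oversight and circuit breakers can be combined in a small wrapper around a model. The sketch below is a minimal illustration, not a certified pattern: the toy model, the 0.9 confidence threshold, the window size and the deferral-rate limit are all hypothetical values. Raw model confidence is often poorly calibrated, so in practice a threshold like this would be set on calibrated scores.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Optional, Tuple

Prediction = Tuple[str, float]  # (label, confidence in [0, 1])


@dataclass
class GuardedModel:
    """Wrap a model with a confidence threshold and a simple circuit breaker.

    - Predictions below `threshold` are deferred to a human reviewer.
    - If the deferral rate over the last `window` requests exceeds
      `max_deferral_rate`, the breaker trips and all later requests go to the
      fallback path until an operator calls `reset()`.
    """

    model: Callable[[float], Prediction]
    threshold: float = 0.9
    window: int = 20
    max_deferral_rate: float = 0.3
    recent: Deque[bool] = field(default_factory=deque)
    tripped: bool = False

    def predict(self, x: float) -> Tuple[str, Optional[str]]:
        if self.tripped:
            return ("fallback", None)  # e.g. hand over to a human or a simpler rule
        label, confidence = self.model(x)
        deferred = confidence < self.threshold
        self.recent.append(deferred)
        if len(self.recent) > self.window:
            self.recent.popleft()
        if len(self.recent) == self.window and sum(self.recent) / self.window > self.max_deferral_rate:
            self.tripped = True  # the current request is still answered; later ones fall back
        return ("defer", None) if deferred else ("accept", label)

    def reset(self) -> None:
        """Operator-initiated reset after the cause has been investigated."""
        self.tripped = False
        self.recent.clear()


def toy_model(x: float) -> Prediction:
    """Hypothetical stand-in model: confidence grows with distance from 0.5."""
    return ("pneumonia" if x > 0.5 else "normal", min(1.0, abs(x - 0.5) * 2))


guard = GuardedModel(toy_model, threshold=0.9, window=5, max_deferral_rate=0.4)
print(guard.predict(0.99))  # confident -> accepted
print(guard.predict(0.55))  # uncertain -> deferred to a human
for _ in range(5):
    guard.predict(0.52)  # a run of uncertain inputs, e.g. after a sensor fault
print(guard.tripped, guard.predict(0.99))  # breaker open -> fallback
```

The breaker deliberately stays open until an explicit `reset()`, so recovery is a human decision rather than something the model grants itself.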

TL;DR When failure costs lives, AI must be engineered like a scalpel, not a sledgehammer.

The Bottom Line

The challenges we’ve outlined aren’t insurmountable obstacles; they’re design constraints that demand respect, discipline, and thoughtful engineering. Each limitation - from unpredictable failures to shifting data landscapes - represents an opportunity to build more robust, transparent, and trustworthy systems.

The question isn’t whether AI belongs in critical systems - it’s already there, making life-and-death decisions every day. The real question is: Are we developing these systems with the rigor they deserve?

This collection exists because we believe the answer must be an emphatic yes. It’s an open call to build AI systems that don’t just perform well in the lab, but earn trust where it matters most.

In critical systems, good enough isn’t good enough. The stakes are too high for anything less than our best engineering.

“Do you code with your loved ones in mind?”

― Emily Durie-Johnson, Strategies for Developing Safety-Critical Software in C++

🌟 Editor’s Choice

- If you’re just starting, here’s our recommended reading list:
  - ML in Production by Christian Kästner // Chapters 2 (From Models to Systems) and 7 (Planning for Mistakes)
  - Building Intelligent Systems by Geoff Hulten // Chapters 6 (Why Creating Intelligent Experiences is Hard), 7 (Balancing Intelligent Experiences) and 24 (Dealing with Mistakes)
  - MJ’s The world and the machine, Kiri Wagstaff’s Machine Learning that Matters and Varshney’s Engineering Safety in Machine Learning

- 🧰 An awesome set of tools for production-ready ML

  A word of caution ☝️ Use them wisely and remember that “a sword is only as good as the man [or woman] who wields it”

- 😈 A collection of scary use cases, incidents and failures of AI, which will hopefully raise awareness of its misuses

- 💳 The now-classic high-interest credit card of technical debt paper by Google

- 🤝 An introduction to trustworthy AI by NVIDIA

- 🚩 Lessons learned from red teaming hundreds of generative AI products by Microsoft

- 🚨 Last but not least, the top 10 risks for LLM applications and Generative AI by OWASP

🏃 TLDR

If you’re in a hurry or just don’t like reading, here’s a podcast-style breakdown created with NotebookLM (courtesy of Pedro Nunes 🙏)

📝 Articles

- (Adedjouma et al., 2024) Engineering Dependable AI Systems

- (Amershi et al., 2019) Software Engineering for Machine Learning: A Case Study

- (Arpteg et al., 2018) Software Engineering Challenges of Deep Learning

- (Balduzzi et al., 2021) Neural Network Based Runway Landing Guidance for General Aviation Autoland

- (Bach et al., 2024) Unpacking Human-AI Interaction in Safety-Critical Industries: A Systematic Literature Review

- (Balagopalan et al., 2024) Machine learning for healthcare that matters: Reorienting from technical novelty to equitable impact

- (Barman et al., 2024) The Brittleness of AI-Generated Image Watermarking Techniques: Examining Their Robustness Against Visual Paraphrasing Attacks

- (Becker et al., 2021) AI at work – Mitigating safety and discriminatory risk with technical standards

- (Belani, Vukovic & Car, 2019) Requirements Engineering Challenges in Building AI-Based Complex Systems

- (Bernardi, Mavridis & Estevez, 2019) 150 Successful Machine Learning Models: 6 Lessons Learned at Booking.com

- (Beyers et al., 2019) Quantification of the Impact of Random Hardware Faults on Safety-Critical AI Applications: CNN-Based Traffic Sign Recognition Case Study

- (Bharadwaj, 2022) Assuring autonomous operations in aviation: is use of AI a good idea?

- (Bloomfield et al., 2021) Safety Case Templates for Autonomous Systems

- (Bojarski et al., 2016) End to End Learning for Self-Driving Cars

- (Bolchini, Cassano & Miele, 2024) Resilience of Deep Learning applications: a systematic literature review of analysis and hardening techniques

- (Bondar, 2025) Ukraine’s Future Vision and Current Capabilities for Waging AI-Enabled Autonomous Warfare

- (Bloomfield & Rushby, 2025) Where AI Assurance Might Go Wrong: Initial lessons from engineering of critical systems

- (Breck et al., 2016) What’s your ML test score? A rubric for ML production systems

- (Breiman, 2001) Statistical Modeling: The Two Cultures

- (Bullwinkel et al., 2025) Lessons From Red Teaming 100 Generative AI Products

- (Burton & Herd, 2023) Addressing uncertainty in the safety assurance of machine-learning

- (Chance et al., 2023) Assessing Trustworthiness of Autonomous Systems

- (Chihani, 2021) Formal Methods for AI: Lessons from the past, promises of the future

- (Clavière, 2023) Safety verification of neural network based systems using formal methods

- (Clavière, Kirov & Cofer, 2025) How to Verify Generalization Capability of a Neural Network with Formal Methods

- (Clement et al., 2023) Process Assurance for Object Detection Through Deep Neural Networks to Accomplish the Autonomous Aerial Refueling Task

- (Cummings, 2021) Rethinking the Maturity of Artificial Intelligence in Safety-Critical Settings

- (Dalrymple et al., 2025) Towards Guaranteed Safe AI: A Framework for Ensuring Robust and Reliable AI Systems

- (Delseny et al., 2021) White Paper Machine Learning in Certified Systems

- (Demir, Moslem & Duleba, 2024) Artificial Intelligence in Aviation Safety: Systematic Review and Biometric Analysis

- (Dmitriev, Schumann & Holzapfel, 2022) Toward Certification of Machine-Learning Systems for Low Criticality Airborne Applications

- (Dmitriev et al., 2023) Runway Sign Classifier: A DAL C Certifiable Machine Learning System

- (Dmitriev et al., 2024) Safety assessment of a machine learning-based aircraft emergency braking system: A case study

- (Dragan & Srinivasa, 2013) A policy-blending formalism for shared control

- (Dutta et al., 2017) Output range analysis for deep feedforward neural networks

- (Endres et al., 2023) Can Large Language Models Transform Natural Language Intent into Formal Method Postconditions?

- (Farahmand & Neu, 2025) AI Safety for Physical Infrastructures: A Collaborative and Interdisciplinary Approach

- (Faria, 2018) Machine learning safety: An overview

- (Feather & Pinto, 2023) Assurance for Autonomy – JPL’s past research, lessons learned, and future directions

- (Gauerhof, Munk & Burton, 2018) Structuring validation targets of a machine learning function applied to automated driving

- (Gebru et al., 2018) Datasheets for Datasets

- (Gehr et al., 2018) AI2: Safety and Robustness Certification of Neural Networks with Abstract Interpretation

- (Guldimann et al., 2024) COMPL-AI Framework: A Technical Interpretation and LLM Benchmarking Suite for the EU Artificial Intelligence Act

- (Gursel et al., 2025) The role of AI in detecting and mitigating human errors in safety-critical industries: A review

- (Habli, Lawton & Porter, 2020) Artificial intelligence in health care: accountability and safety

- (Haroun et al., 2023) Machine learning requirements for the airworthiness of structural health monitoring systems in aircraft

- (Hasani et al., 2022) Trustworthy Artificial Intelligence in Medical Imaging

- (Hennigen et al., 2023) Towards Verifiable Text Generation with Symbolic References

- (Holzinger et al., 2017) What do we need to build explainable AI systems for the medical domain?

- (Höhndorf et al., 2024) Artificial Intelligence Verification Based on Operational Design Domain (ODD) Characterizations Utilizing Subset Simulation

- (Hopkins & Booth, 2021) Machine Learning Practices Outside Big Tech: How Resource Constraints Challenge Responsible Development

- (Hou & Sun, 2025) A Hybrid Deep Learning Architecture for Enhanced Vertical Wind and FBAR Estimation in Airborne Radar Systems

- (Houben et al., 2022) Inspect, Understand, Overcome: A Survey of Practical Methods for AI Safety

- (Jackson, 1995) The world and the machine

- (Jamakatel et al., 2024) A Goal-Directed Dialogue System for Assistance in Safety-Critical Application

- (Jaß & Thomas, 2025) Using N-Version Architectures for Railway Segmentation with Deep Neural Networks

- (Johnson, 2018) The Increasing Risks of Risk Assessment: On the Rise of Artificial Intelligence and Non-Determinism in Safety-Critical Systems

- (Jovanovic et al., 2022) Private and Reliable Neural Network Inference

- (Kaakai et al., 2022) Toward a Machine Learning Development Lifecycle for Product Certification and Approval in Aviation

- (Kaakai et al., 2023) Data-Centric Operational Design Domain Characterization for Machine Learning-Based Aeronautical Products

- (Kapoor & Narayanan, 2023) Leakage and the Reproducibility Crisis in ML-based Science

- (Khattak et al., 2024) AI-supported estimation of safety critical wind shear-induced aircraft go-around events utilizing pilot reports

- (Kiseleva et al., 2025) The EU AI Act, Stakeholder Needs, and Explainable AI: Aligning Regulatory Compliance in a Clinical Decision Support System

- (Kuwajima, Yasuoka & Nakae, 2020) Engineering problems in machine learning systems

- (Lacasa et al., 2025) Towards certification: A complete statistical validation pipeline for supervised learning in industry

- (Leike et al., 2017) AI Safety Gridworlds

- (Leofante et al., 2018) Automated Verification of Neural Networks: Advances, Challenges and Perspectives

- (Lesage et al., 2025) Challenges of neural network accelerators for aeronautics—position paper

- (Leyli-Abadi et al., 2025) A Conceptual Framework for AI-based Decision Systems in Critical Infrastructures

- (Li et al., 2022) Trustworthy AI: From Principles to Practices

- (Li et al., 2024) Formal-LLM: Integrating Formal Language and Natural Language for Controllable LLM-based Agents

- (Lones, 2021) How to avoid machine learning pitfalls: a guide for academic researchers

- (Lubana, 2024) Understanding and Identifying Challenges in Design of Safety-Critical AI Systems

- (Luckcuck et al., 2019) Formal Specification and Verification of Autonomous Robotic Systems: A Survey

- (Lwakatare et al., 2020) Large-scale machine learning systems in real-world industrial settings: A review of challenges and solutions

- (Macher et al., 2021) Architectural Patterns for Integrating AI Technology into Safety-Critical System

- (Machida, 2019) N-Version Machine Learning Models for Safety Critical Systems

- (Mariani et al., 2023) Trustworthy AI - Part I, II and III

- (Mattioli et al., 2023) AI Engineering to Deploy Reliable AI in Industry

- (Meyers, Löfstedt & Elmroth, 2023) Safety-critical computer vision: an empirical survey of adversarial evasion attacks and defenses on computer vision systems

- (Mir & Perinpanayagam, 2022) Certification of machine learning algorithms for safe-life assessment of landing gear

- (Mitchell et al., 2019) Model cards for model reporting

- (Nahar et al., 2022) Collaboration challenges in building ML-enabled systems: communication, documentation, engineering, and process

- (Ozkaya, 2020) What Is Really Different in Engineering AI-Enabled Systems?

- (Papernot et al., 2018) SoK: Security and Privacy in Machine Learning

- (Passi & Sengers, 2020) Making data science systems work

- (Patel et al., 2025) Towards Secure MLOps: Surveying Attacks, Mitigation Strategies, and Research Challenges

- (Pattabiraman, Li & Chen, 2020) Error Resilient Machine Learning for Safety-Critical Systems: Position Paper

- (Pereira & Thomas, 2024) Challenges of Machine Learning Applied to Safety-Critical Cyber-Physical Systems

- (Perez-Cerrolaza et al., 2024) Artificial Intelligence for Safety-Critical Systems in Industrial and Transportation Domains: A Survey

- (Phelps & Ranson, 2023) Of Models and Tin Men: A Behavioural Economics Study of Principal-Agent Problems in AI Alignment using Large-Language Models

- (Picardi et al., 2020) Assurance Argument Patterns and Processes for Machine Learning in Safety-Related Systems

- (Pushkarna, Zaldivar & Kjartansson, 2022) Data Cards: Purposeful and Transparent Dataset Documentation for Responsible AI

- (Raj, 2025) Model-Based Approaches in Safety-Critical Embedded System Design

- (Rajagede et al., 2025) NAPER: Fault Protection for Real-Time Resource-Constrained Deep Neural Networks

- (Ramos et al., 2024) Collaborative Intelligence for Safety-Critical Industries: A Literature Review

- (Reuel et al., 2024) Open Problems in Technical AI Governance

- (Ribeiro, Singh & Guestrin, 2016) “Why Should I Trust You?”: Explaining the Predictions of Any Classifier

- (Sambasivan, 2021) “Everyone wants to do the model work, not the data work”: Data Cascades in High-Stakes AI

- (Schulhoff et al., 2025) Ignore This Title and HackAPrompt: Exposing Systemic Vulnerabilities of LLMs through a Global Scale Prompt Hacking Competition

- (Schulhoff et al., 2024) The Prompt Report: A Systematic Survey of Prompt Engineering Techniques

- (Sculley et al., 2011) Detecting adversarial advertisements in the wild

- (Sculley et al., 2015) Hidden Technical Debt in Machine Learning Systems

- (Sendak et al., 2020) Real-World Integration of a Sepsis Deep Learning Technology Into Routine Clinical Care: Implementation Study

- (Seshia, Sadigh & Sastry, 2020) Towards Verified Artificial Intelligence

- (Shalev-Shwartz, Shammah & Shashua, 2017) On a Formal Model of Safe and Scalable Self-driving Cars

- (Sharif et al., 2016) Accessorize to a Crime: Real and Stealthy Attacks on State-of-the-Art Face Recognition

- (Shaw & Zhu, 2022) Can Software Engineering Harness the Benefits of Advanced AI?

- (Shuaia et al., 2024) Advances in Assuring Artificial Intelligence and Machine Learning Development Lifecycle and Their Applications in Aviation

- (Sinha et al., 2020) Neural Bridge Sampling for Evaluating Safety-Critical Autonomous Systems

- (Sousa, Moutinho & Almeida, 2020) Expert-in-the-loop Systems Towards Safety-critical Machine Learning Technology in Wildfire Intelligence

- (Sprockhoff et al., 2024) Model-Based Systems Engineering for AI-Based Systems

- (Stoica et al., 2017) A Berkeley View of Systems Challenges for AI

- (Strubell, Ganesh & McCallum, 2019) Energy and Policy Considerations for Deep Learning in NLP

- (Tambon et al., 2021) How to Certify Machine Learning Based Safety-critical Systems? A Systematic Literature Review

- (Torens et al., 2024) From Operational Design Domain to Runtime Monitoring of AI-based Aviation Systems

- (Urban & Miné, 2021) A Review of Formal Methods applied to Machine Learning

- (Uuk et al., 2025) Effective Mitigations for Systemic Risks from General-Purpose AI

- (Valot et al., 2025) Implementation of airborne ML models with semantics preservation

- (Varshney, 2016) Engineering Safety in Machine Learning

- (Wagstaff, 2012) Machine Learning that Matters

- (Wang & Chung, 2021) Artificial intelligence in safety-critical systems: a systematic review

- (Webster et al., 2019) A corroborative approach to verification and validation of human-robot teams

- (Wei et al., 2022) On the Safety of Interpretable Machine Learning: A Maximum Deviation Approach

- (Weidinger et al., 2024) Holistic Safety and Responsibility Evaluations of Advanced AI Models

- (Wen & Machida, 2025) Reliability modeling for three-version machine learning systems through Bayesian networks

- (Wiggerthale & Reich, 2024) Explainable Machine Learning in Critical Decision Systems: Ensuring Safe Application and Correctness

- (Williams & Yampolskiy, 2021) Understanding and Avoiding AI Failures: A Practical Guide

- (Woodburn, 2021) Machine Learning and Software Product Assurance: Bridging the Gap

- (Xie et al., 2020) DeepHunter: a coverage-guided fuzz testing framework for deep neural networks

- (Yang, 2017) The Role of Design in Creating Machine-Learning-Enhanced User Experience

- (Yu et al., 2024) A Survey on Failure Analysis and Fault Injection in AI Systems

- (Zhang et al., 2018) Efficient Neural Network Robustness Certification with General Activation Functions

- (Zhang & Li, 2020) Testing and verification of neural-network-based safety-critical control software: A systematic literature review

- (Zhang et al., 2020) Machine Learning Testing: Survey, Landscapes and Horizons

- (Zhang et al., 2024) The Fusion of Large Language Models and Formal Methods for Trustworthy AI Agents: A Roadmap

✍️ Blogs / News

- (Acubed, 2023) Airbus Validates Computer Vision-Based Technologies to Increase Safety Through Automation

- (Amazon Science, 2020) How to integrate formal proofs into software development

- (Beca, 2025) Can Machine Learning Systems be Certified on Aircraft?

- (Bits & Atoms, 2017) Designing Effective Policies for Safety-Critical AI

- (Bits & Chips, 2024) Verifying and validating AI in safety-critical systems

- (Clear Prop, 2023) Unpacking Human-AI Interaction in Safety-Critical Industries: A Systematic Literature Review

- (CleverHans Lab, 2016) Breaking things is easy

- (DeepMind, 2018) Building safe artificial intelligence: specification, robustness, and assurance

- (Doing AI Governance, 2025) AI Governance Mega-map: Safe, Responsible AI and System, Data & Model Lifecycle

- (EETimes, 2023) Can We Trust AI in Safety Critical Systems?

- (Embedded, 2024) The impact of AI/ML on qualifying safety-critical software

- (Forbes, 2022) Part 2: Reflections On AI (Historical Safety Critical Systems)

- (Gartner, 2021) Gartner Identifies the Top Strategic Technology Trends for 2021

- (Ground Truths, 2025) When Doctors With AI Are Outperformed by AI Alone

- (Homeland Security, 2022) Artificial Intelligence, Critical Systems, and the Control Problem

- (Kubiya, 2025a) Deterministic AI vs Generative AI: A Developer’s Perspective

- (Kubiya, 2025b) What is Deterministic AI: Concepts, Benefits, and Its Role in Building Reliable AI Agents (2025 Guide)

- (Kubiya, 2025c) Top 5 Challenges in Achieving Deterministic AI and How to Solve Them

- (Lakera, 2025) AI Red Teaming: Securing Unpredictable Systems

- (Learn Prompting, 2025) What is AI Red Teaming?

- (Lynx, 2023) How is AI being used in Aviation?

- (MathWorks, 2023) The Road to AI Certification: The importance of Verification and Validation in AI

- (Pivot to AI, 2025) Vibe nuclear — let’s use AI shortcuts on reactor safety!

- (Perforce, 2019) Why SOTIF (ISO/PAS 21448) Is Key For Safety in Autonomous Driving

- (Protect AI, 2025) The Expanding Role of Red Teaming in Defending AI Systems

- (restack, 2025) Safety In Critical AI Systems

- (Safety4Sea, 2024) The risks and benefits of AI translations in safety-critical industries

- (SE4ML, 2025) Machine Learning Engineering Practices in Recent Years: Trends and Challenges

- (Space and Time, 2024) Verifiable LLMs for the Modern Enterprise

- (Susana Cox, 2025) We Need To Talk About Real Engineering

- (Taranis, 2025) Datacenters in space are a terrible, horrible, no good idea

- (think AI, 2024) Artificial Intelligence in Safety-Critical Systems

- (Thinking Machines, 2025) Defeating Nondeterminism in LLM Inference

- (VentureBeat, 2019) Why do 87% of data science projects never make it into production?

- (Wiz, 2025) What is AI Red Teaming?

📚 Books

- (Barocas, Hardt & Narayanan, 2023) Fairness and Machine Learning

- (Bass et al., 2025) Engineering AI Systems: Architecture and DevOps Essentials

- (Chen et al., 2022) Reliable Machine Learning: Applying SRE Principles to ML in Production

- (Christian, 2020) The Alignment Problem: Machine Learning and Human Values

- (Crowe et al., 2024) Machine Learning Production Systems: Engineering Machine Learning Models and Pipelines

- (Dix, 2025) Artificial Intelligence: Humans at the Heart of Algorithms

- (Hall, Curtis & Pandey, 2023) Machine Learning for High-Risk Applications: Approaches to Responsible AI

- (Hopgood, 2021) Intelligent Systems for Engineers and Scientists: A Practical Guide to Artificial Intelligence

- (Huang, Jin & Ruan, 2023) Machine Learning Safety

- (Hulten, 2018) Building Intelligent Systems: A Guide to Machine Learning Engineering

- (Huyen, 2022) Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications

- (Jackson, Thomas & Millett, 2007) Software for Dependable Systems: Sufficient Evidence?

- (Joseph et al., 2019) Adversarial Machine Learning

- (Kastner, 2025) Machine Learning in Production: From Models to Products

- (Koopman, 2025) Embodied AI Safety: Reimagining safety engineering for artificial intelligence in physical systems

- (Levenson, 1995) Safeware: System Safety and Computers

- (Martin, 2025) We, Programmers: A Chronicle of Coders from Ada to AI

- (Molnar, 2025) Interpretable Machine Learning: A Guide for Making Black Box Models Explainable

- (Pelillo & Scantamburlo, 2021) Machines We Trust: Perspectives on Dependable AI

- (Razmi, 2024) AI Doctor: The Rise of Artificial Intelligence in Healthcare - A Guide for Users, Buyers, Builders, and Investors

- (Spector et al., 2022) Data Science in Context: Foundations, Challenges, Opportunities

- (Suen, Scheinker & Enns, 2022) Artificial Intelligence for Healthcare: Interdisciplinary Partnerships for Analytics-driven Improvements in a Post-COVID World

- (Topol, 2019) Deep Medicine: How Artificial Intelligence Can Make Healthcare Human Again

- (Tran, 2024) Artificial Intelligence for Safety and Reliability Engineering: Methods, Applications, and Challenges

- (Rierson, 2013) Developing Safety-Critical Software: A Practical Guide for Aviation Software and DO-178C Compliance

- (Varshney, 2021) Trust in Machine Learning

- (Visengeriyeva, 2025) The AI Engineer’s Guide to Surviving the EU AI Act: Navigating the EU Regulatory Requirements

- (Yampolskiy, 2018) Artificial Intelligence Safety and Security

📜 Certifications

- (ISTQB) Certified Tester AI Testing (CT-AI)

- (USAII) Certified AI Scientist (CAIS)

🎤 Conferences

- (EDCC2025) 20th European Dependable Computing Conference

- (ELLIS) Robust ML Workshop 2024

- (HAI) Workshop on Sociotechnical AI Safety

- (IJCAI-24) AI for Critical Infrastructure

- (KDD2023) Trustworthy machine learning

- (MITRE) FAA Artificial Intelligence Safety Assurance: Roadmap and Technical Exchange Meetings

- (NFM-AI-Safety-20) NFM Workshop on AI Safety

- (MLOps Community) AI in Production 2024

- (MLOps Community) LLMs in Production 2023

- (Robust Intelligence) ML:Integrity 2022

- (SCSC 2025) Safety Critical Systems Symposium SSS’25

- (SGAC 2023) South Wales Safety Groups Alliance Conference and Exhibition

- (SSS’24) 32nd annual Safety-Critical Systems Symposium

- (WMC’2024) Workshop on Machine-learning enabled safety-Critical systems

👩🏫 Courses

- AI for Good Specialization @ DeepLearning.AI

- AI for Social Good @ Stanford

- AI Red Teaming @ Microsoft

- Dependable AI Systems @ University of Illinois Urbana-Champaign

- Ethics and policy in data science @ Cornell

- Ethics of Computing @ Princeton

- Fairness in machine learning @ Berkeley

- Fairness in machine learning @ Princeton

- Introduction to AI Governance @ Stanford

- Introduction to AI Safety @ Stanford

- Limits to Prediction @ Princeton

- Machine Learning for Healthcare @ MIT

- Machine Learning in Production @ Carnegie Mellon

- Machine Learning Security @ Oregon State

- Real-Time Mission-Critical Systems Design @ University of Colorado Boulder / Coursera

- Reliable and Interpretable Artificial Intelligence @ ETH Zürich

- Responsible AI @ Amazon MLU

- Robustness in Machine Learning @ University of Washington

- Safe and Interactive Robotics @ Stanford

- Safety Critical Systems @ IET

- Safety Critical Systems @ Oxford

- Security and Privacy of Machine Learning @ University of Virginia

- Trustworthy Artificial Intelligence @ University of Michigan, Dearborn

- Trustworthy Machine Learning @ Oregon State

- Trustworthy Machine Learning @ University of Tübingen

- Validation of Safety Critical Systems @ Stanford

📙 Guidelines

- (APT Research) Artificial Intelligence/Machine Learning System Safety

- (CAIDP) Universal Guidelines for AI

- (CISA) AI Data Security: Best Practices for Securing Data Used to Train and Operate AI Systems

- (DIU) Responsible AI Guidelines

- (ESA) ECSS-E-HB-40-02A – Machine learning handbook

- (European Commission) Ethics guidelines for trustworthy AI

- (European Union) The EU AI Act

- (FDA) Good Machine Learning Practice for Medical Device Development: Guiding Principles

- (Google) AI Principles

- (Google) SAIF // Secure AI Framework: A practitioner’s guide to navigating AI security

- (Harvard University) Initial guidelines for the use of Generative AI tools at Harvard

- (Homeland Security) Roles and Responsibilities Framework for Artificial Intelligence in Critical Infrastructure

- (Homeland Security) Safety and Security Guidelines for Critical Infrastructure Owners and Operators

- (Inter-Parliamentary Union) Guidelines for AI in Parliaments

- (Microsoft) Responsible AI: Principles and Approach

- (Ministry of Defence) JSP 936: Dependable Artificial Intelligence (AI) in Defence (Part 1: Directive)

- (NCSC) Guidelines for secure AI system development

- (OECD) AI Principles

- (Stanford) Responsible AI at Stanford

🤝 Initiatives

- (DARPA) AIQ: Artificial Intelligence Quantified

- (Data, Responsibly) Foundations of responsible data management

- (DEEL) Dependable, Certifiable & Explainable Artificial Intelligence for Critical Systems

- (DSG) Dependable Systems Group

- (FUTURE-AI) Best practices for trustworthy AI in medicine

- (IRT Saint Exupéry) AI for Critical Systems Competence Center

- (ITU) AI for Good

- (Partnership on AI) Safety Critical AI

- (SAFE) Stanford Center for AI Safety

- (SAFEXPLAIN) Safe and Explainable Critical Embedded Systems based on AI

- (SAIL) Systems for Artificial Intelligence Lab

- (SCSC) Safety Critical Systems Club

- (SISL) Stanford Intelligent Systems Laboratory

- (SRILAB) Safe Artificial Intelligence

- (SustainML) Sustainable Machine Learning

- Center for Responsible AI

- Future of Life Institute

- Responsible AI Institute

- WASP WARA Public Safety

🛣️ Roadmaps

- (CISA) Roadmap for Artificial Intelligence: a whole-of-agency plan aligned with national AI strategy

- (EASA) Artificial Intelligence Roadmap: a human-centric approach to AI in aviation

- (FAA) Roadmap for Artificial Intelligence Safety Assurance

- (RAILS) Roadmaps for AI Integration in the Rail Sector

📋 Reports

- (AI Now Institute) Fission for Algorithms: The Undermining of Nuclear Regulation in Service of AI

- (Air Street Capital) State of AI Report 2024

- (CLTC) The Flight to Safety-Critical AI: Lessons in AI Safety from the Aviation Industry

- (EASA, 2021) First usable guidance for Level 1 machine learning applications

- (EASA, 2023) Formal Methods use for Learning Assurance (ForMuLA)

- (EASA & Daedalean, 2024) Concepts of Design Assurance for Neural Networks (CoDANN)

- (FLI) AI Safety Index 2024

- (Google) Responsible AI Progress Report 2025

- (Gov.UK) International AI Safety Report 2025

- (LangChain) State of AI Agents

- (McKinsey) Superagency in the workplace: Empowering people to unlock AI’s full potential

- (Microsoft) Responsible AI Transparency Report 2024

- (NASA) Examining Proposed Uses of LLMs to Produce or Assess Assurance Arguments

- (PwC) US Responsible AI Survey

- (rosap) Assurance of Machine Learning-Based Aerospace Systems: Towards an Overarching Properties-Driven Approach

📐 Standards

Generic

- ANSI/UL 4600 > Standard for Evaluation of Autonomous Products

- IEEE 7009-2024 > IEEE Standard for Fail-Safe Design of Autonomous and Semi-Autonomous Systems

- ISO/IEC 23053:2022 > Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML)

- ISO/IEC 23894:2023 > Information technology — Artificial intelligence — Guidance on risk management

- ISO/IEC 38507:2022 > Information technology — Governance of IT — Governance implications of the use of artificial intelligence by organizations

- ISO/IEC 42001:2023 > Information technology — Artificial intelligence — Management system

- ISO/IEC JTC 1/SC 42 > Artificial intelligence

- NIST AI 100-1 > Artificial Intelligence Risk Management Framework

- SAE G-34 > Artificial Intelligence in Aviation

Coding

- AUTOSAR: guidelines for the use of the C++14 language in critical and safety-related systems

- BARR-C:2018: Embedded C Coding Standard

- ESCR: Embedded System development Coding Reference guide

- HIC++: High Integrity C++ coding standard v4.0

- JSF AV C++: Joint Strike Fighter Air Vehicle C++ Coding Standards

- JPL C: JPL Institutional Coding Standard for the C programming language

- MISRA-C/2004: Guidelines for the use of the C language in critical systems

- MISRA-C/2012: Guidelines for the use of the C language in critical systems

- MISRA-C++/2008: Guidelines for the use of the C++ language in critical systems

- Rules for secure C software development: ANSSI guideline

- SEI CERT: Rules for Developing Safe, Reliable, and Secure Systems

🛠️ Tools

Adversarial Attacks

- bethgelab/foolbox: fast adversarial attacks to benchmark the robustness of ML models in PyTorch, TensorFlow and JAX

- Trusted-AI/adversarial-robustness-toolbox: a Python library for ML security - evasion, poisoning, extraction, inference - red and blue teams
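
The attacks these libraries automate are easier to reason about with one concrete instance. Below is a minimal sketch of the Fast Gradient Sign Method (FGSM) against a hand-rolled logistic-regression model in plain Python; it does not use the Foolbox or ART APIs, and the weights, input and `eps` are hypothetical values chosen only for illustration.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def bce_loss(w, b, x, y):
    """Binary cross-entropy for one example with label y in {0, 1}."""
    p = predict(w, b, x)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, eps):
    """Fast Gradient Sign Method: move every feature by eps in the direction
    that increases the loss. For logistic regression with cross-entropy,
    d(loss)/dx = (p - y) * w, so no autodiff framework is needed."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical model and input, for illustration only.
w, b = [2.0, -1.0], 0.0
x, y = [0.5, 0.5], 1
x_adv = fgsm(w, b, x, y, eps=0.1)
print(x_adv, bce_loss(w, b, x, y), bce_loss(w, b, x_adv, y))
```

A small, bounded perturbation raises the loss and lowers the model's confidence in the true class. Real attacks on deep networks obtain the gradient through autodiff and usually clip the result back into the valid input range.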

Data Management

- cleanlab/cleanlab: data-centric AI package for data quality and ML with messy, real-world data and labels

- facebook/Ax: an accessible, general-purpose platform for understanding, managing, deploying, and automating adaptive experiments

- great-expectations/great_expectations: always know what to expect from your data

- iterative/dvc: a command line tool and VS Code Extension to help you develop reproducible ML projects

- pydantic/pydantic: data validation using Python type hints

- tensorflow/data-validation: a library for exploring and validating ML data

- unionai-oss/pandera: data validation for scientists, engineers, and analysts seeking correctness
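
Validators such as pydantic and pandera formalize the idea of a schema that data must satisfy before it reaches a model. As a dependency-free sketch of that idea (not the API of any of these libraries), the snippet below splits raw records into accepted and rejected ones; the `SensorReading` schema and its -40 to 125 °C bounds are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorReading:
    """Hypothetical schema for one telemetry record."""
    sensor_id: str
    temperature_c: float

    def __post_init__(self):
        # Dataclass type hints are not enforced at runtime, so check explicitly.
        if not self.sensor_id:
            raise ValueError("sensor_id must be non-empty")
        if not math.isfinite(self.temperature_c):
            raise ValueError("temperature_c must be finite")
        if not -40.0 <= self.temperature_c <= 125.0:  # hypothetical sensor range
            raise ValueError(f"temperature_c out of range: {self.temperature_c}")


def validate_batch(rows):
    """Split raw dict rows into validated records and (row, error) rejects."""
    valid, rejected = [], []
    for row in rows:
        try:
            valid.append(SensorReading(**row))
        except (TypeError, ValueError) as exc:  # missing/extra keys or bad values
            rejected.append((row, str(exc)))
    return valid, rejected


rows = [
    {"sensor_id": "t1", "temperature_c": 21.5},
    {"sensor_id": "t2", "temperature_c": 900.0},  # out of range
    {"sensor_id": "", "temperature_c": 20.0},     # empty id
    {"sensor_id": "t4"},                          # missing field
]
valid, rejected = validate_batch(rows)
print(len(valid), [err for _, err in rejected])
```

Keeping the rejects together with their error messages, instead of silently dropping them, leaves an audit trail of what the model was never shown.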

Model Evaluation

- confident-ai/deepeval: a simple-to-use, open-source LLM evaluation framework, for evaluating and testing LLM systems

- RobustBench/robustbench: a standardized adversarial robustness benchmark

- trust-ai/SafeBench: a benchmark for evaluating Autonomous Vehicles in safety-critical scenarios

Model Fairness & Privacy

- Dstack-TEE/dstack: TEE framework for private AI model deployment with hardware-level isolation using Intel TDX and NVIDIA Confidential Computing

- fairlearn/fairlearn: a Python package to assess and improve fairness of ML models

- pytorch/opacus: a library that enables training PyTorch models with differential privacy

- tensorflow/privacy: a library for training ML models with privacy for training data

- zama-ai/concrete-ml: a Privacy-Preserving Machine Learning (PPML) open-source set of tools built on top of Concrete by Zama
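
Opacus and TensorFlow Privacy apply differential privacy during training (DP-SGD). The core guarantee is easiest to see in the simpler Laplace mechanism, sketched below for a counting query: release f(D) + Lap(Δf / ε), where Δf is the query's sensitivity. This is an illustrative sketch, not either library's API. The data and ε are hypothetical, and Python's `random` module is not a cryptographically secure noise source, so the sketch is unsuitable for real releases.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism).

    Adding or removing one record changes a count by at most 1, so the
    L1 sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(0)               # seeded only to make the demo repeatable
ages = [34, 71, 45, 68, 80, 29, 66]  # hypothetical records
noisy = private_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng)
print(noisy)
```

Smaller ε means a larger noise scale and stronger privacy. Each release consumes privacy budget, so repeated queries on the same data must be accounted for.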

Model Interpretability

- MAIF/shapash: user-friendly explainability and interpretability to develop reliable and transparent ML models

- pytorch/captum: a model interpretability and understanding library for PyTorch

- SeldonIO/alibi: a library aimed at ML model inspection and interpretation
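
These libraries offer many attribution methods. One of the simplest model-agnostic ones is permutation feature importance: shuffle one feature column and measure how much accuracy drops. The plain-Python sketch below is not taken from any of the listed libraries, and the toy model and data are hypothetical.

```python
import random


def accuracy(model, X, y):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled.

    Shuffling a column breaks its link to the labels while keeping its
    marginal distribution, so a large drop means the model relies on it.
    """
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def model(row):
    """Hypothetical toy classifier that only looks at feature 0."""
    return int(row[0] > 0.5)


X = [[i / 20, (i * 7 % 20) / 20] for i in range(20)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))
```

Because the toy model ignores feature 1, shuffling it never changes a prediction and its importance is exactly zero. Correlated features can mask each other under this method, which is one reason to cross-check it against other attribution techniques.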

Model Lifecycle

- aimhubio/aim: an easy-to-use and supercharged open-source experiment tracker

- comet-ml/opik: an open-source platform for evaluating, testing and monitoring LLM applications

- evidentlyai/evidently: an open-source ML and LLM observability framework

- IDSIA/sacred: a tool to help you configure, organize, log and reproduce experiments

- mlflow/mlflow: an open-source platform for the ML lifecycle

- wandb/wandb: a fully-featured AI developer platform

Model Security

- azure/PyRIT: risk identification tool to assess the security and safety issues of generative AI systems

- ffhibnese/Model-Inversion-Attack-ToolBox: a comprehensive toolbox for model inversion attacks and defenses

- nvidia/garak: Generative AI red-teaming and assessment kit

- protectai/llm-guard: a comprehensive tool designed to fortify the security of LLMs

Model Testing & Validation

- deepchecks/deepchecks: an open-source package for validating ML models and data

- explodinggradients/ragas: objective metrics, intelligent test generation, and data-driven insights for LLM apps

- pytorchfi/pytorchfi: a runtime fault injection tool for PyTorch 🔥
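
Runtime fault injection tools such as pytorchfi perturb neurons or weights to study how a model behaves under hardware faults. The library-agnostic sketch below (not pytorchfi's API) models a single-event upset as one flipped bit in an IEEE 754 float32 weight; the weights and input are hypothetical.

```python
import math
import struct


def flip_bit(value: float, bit: int) -> float:
    """Flip one bit of a float32 value (0 = mantissa LSB, 23 = exponent LSB, 31 = sign)."""
    if not 0 <= bit < 32:
        raise ValueError("bit must be in [0, 31]")
    (as_int,) = struct.unpack(">I", struct.pack(">f", value))
    (flipped,) = struct.unpack(">f", struct.pack(">I", as_int ^ (1 << bit)))
    return flipped


def inject_fault(weights, index: int, bit: int):
    """Return a copy of a flat weight list with a single-bit upset at `index`."""
    faulty = list(weights)
    faulty[index] = flip_bit(faulty[index], bit)
    return faulty


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


weights = [0.5, -0.25, 1.0]  # hypothetical layer weights
x = [1.0, 2.0, 3.0]          # hypothetical input
clean = dot(weights, x)
for bit in (0, 23, 30, 31):
    print(bit, dot(inject_fault(weights, 2, bit), x))
```

The impact depends heavily on which bit is hit: a mantissa LSB flip barely moves the output, a sign flip negates the weight, and flipping the top exponent bit of 1.0 turns it into infinity. This asymmetry is why fault-injection campaigns sweep bit positions instead of sampling a single fault.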

Oldies 🕰️

- pralab/secml: Python library for the security evaluation of Machine Learning algorithms

Bleeding Edge ⚗️

Just a quick note 📌 This section includes some promising, open-source tools we’re currently testing and evaluating at Critical Software. We prioritize minimal, reliable, security-first, prod-ready tools with support for local deployment. If you know better ones, feel free to reach out to one of the maintainers or open a pull request.

- agno-agi/agno: a lightweight library for building multi-modal agents

- Arize-ai/phoenix: an open-source AI observability platform designed for experimentation, evaluation, and troubleshooting

- BerriAI/litellm: call all LLM APIs using the OpenAI format [Bedrock, Huggingface, VertexAI, TogetherAI, Azure, OpenAI, Groq, &c.]

- browser-use/browser-use: make websites accessible for AI agents

- Cinnamon/kotaemon: an open-source RAG-based tool for chatting with your documents

- ComposioHQ/composio: equips your AI agents & LLMs with 100+ high-quality integrations via function calling

- deepset-ai/haystack: orchestration framework to build customizable, production-ready LLM applications

- dottxt-ai/outlines: make LLMs speak the language of every application

- DS4SD/docling: get your documents ready for gen AI

- eth-sri/lmql: a programming language for LLMs based on a superset of Python

- exo-explore/exo: run your own AI cluster at home with everyday devices 📱💻 🖥️⌚

- FlowiseAI/Flowise: drag & drop UI to build your customized LLM flow

- Giskard-AI/giskard: control risks of performance, bias and security issues in AI systems

- groq/groq-python: the official Python library for the Groq API

- guidance-ai/guidance: a guidance language for controlling large language models

- h2oai/h2o-llmstudio: a framework and no-code GUI for fine-tuning LLMs

- hiyouga/LLaMA-Factory: unified efficient fine-tuning of 100+ LLMs and VLMs

- instructor-ai/instructor: the most popular Python library for working with structured outputs from LLMs

- ItzCrazyKns/Perplexica: an AI-powered search engine and open source alternative to Perplexity AI

- keephq/keep: open-source AIOps and alert management platform

- khoj-ai/khoj: a self-hostable AI second brain

- langfuse/langfuse: an open source LLM engineering platform with support for LLM observability, metrics, evals, prompt management, playground, datasets

- langgenius/dify: an open-source LLM app development platform, which combines agentic AI workflow, RAG pipeline, agent capabilities, model management, observability features and more, letting you quickly go from prototype to production

- latitude-dev/latitude-llm: open-source prompt engineering platform to build, evaluate, and refine your prompts with AI

- microsoft/data-formulator: transform data and create rich visualizations iteratively with AI 🪄

- microsoft/prompty: an asset class and format for LLM prompts designed to enhance observability, understandability, and portability for developers

- microsoft/robustlearn: a unified library for research on robust ML

- Mintplex-Labs/anything-llm: all-in-one Desktop & Docker AI application with built-in RAG, AI agents, No-code agent builder, and more

- ollama/ollama: get up and running with Llama 3.3, DeepSeek-R1, Phi-4, Gemma 2, and other large LMs

- promptfoo/promptfoo: a developer-friendly local tool for testing LLM applications

- pydantic/pydantic-ai: agent framework / shim to use Pydantic with LLMs

- run-llama/llama_index: the leading framework for building LLM-powered agents over your data

- ScrapeGraphAI/Scrapegraph-ai: a web scraping python library that uses LLM and direct graph logic to create scraping pipelines for websites and local documents

- stanfordnlp/dspy: the framework for programming - not prompting - language models

- topoteretes/cognee: reliable LLM memory for AI applications and AI agents

- unitaryai/detoxify: trained models and code to predict toxic comments

- unslothai/unsloth: finetune Llama 3.3, DeepSeek-R1 and reasoning LLMs 2x faster with 70% less memory! 🦥

📺 Videos

- (ESSS, 2024) AI Revolution Transforming Safety-Critical Systems EXPLAINED! with Raghavendra Bhat

- (IVA, 2023) AI in Safety-Critical Systems

- (MathWorks, 2023) Understanding and Verifying Your AI Models with Lucas García

- (MathWorks, 2024a) Incorporating Machine Learning Models into Safety-Critical Systems with Lucas García

  - Slides available here

  - (NeurIPS, 2023) From Theory to Practice: Incorporating ML Models into Safety-Critical Systems // older version

- (MathWorks, 2024b) AI Verification & Validation: Trends, Applications, and Challenges

- (MathWorks, 2025) Engineering Resilient Industrial Systems: AI and Cybersecurity with Lucas García, Martin Becker and Rares Curatu

- (MathWorks & Collins Aerospace, 2025) Ensuring Machine Learning Generalization in Avionics Using Formal Methods with Arthur Clavière

- (Microsoft Developer, 2024) How Microsoft Approaches AI Red Teaming with Tori Westerhoff and Pete Bryan

- (MLOps Community, 2025) Robustness, Detectability, and Data Privacy in AI with Vinu Sadasivan and Demetrios Brinkmann

- (NeurIPS, 2024) AI Verification & Validation: Trends, Applications, and Challenges with Lucas García

- (RCLW02, 2025) Calibrating Data-Driven Predictions for Safety-Critical Systems by Carla Ferreira

- (SafeExplain, 2024) Explainable AI for systems with functional safety requirements

- (Simons Institute, 2022) Learning to Control Safety-Critical Systems with Adam Wierman

- (Stanford, 2022) Stanford Seminar - Challenges in AI Safety: A Perspective from an Autonomous Driving Company

- (Stanford, 2024) Best of - AI and safety critical systems

- (valgrAI, 2024) Integrating machine learning into safety-critical systems with Thomas Dietterich

📄 Whitepapers

- (Alan Turing Institute) Defence AI Assurance: Identifying Promising Practice and A System Card Template for Defence

- (Fraunhofer) Dependable AI: How to use Artificial Intelligence even in critical applications?

- (IET) The Application of Artificial Intelligence in Functional Safety

- (MathWorks) Verify an Airborne Deep Learning System

- (Thales) The Challenges of using AI in Critical Systems

👷🏼 Working Groups

- (CWE) Artificial Intelligence WG

- (EUROCAE) WG-114 / Artificial Intelligence

- (Linux Foundation) ONNX Safety-Related Profile

- (SCSC) Safety of AI / Autonomous Systems Working Group

👾 Miscellaneous

- AI Incident Database: dedicated to indexing the collective history of harms or near harms realized in the real world by the deployment of AI systems

- AI Safety: the hub for AI safety resources

- AI Safety Landscape: AI safety research agendas

- AI Safety Quest: designed to help new people more easily navigate the AI Safety ecosystem, connect with like-minded people and find projects that are a good fit for their skills

- AI Safety Support: a community-building project working to reduce the likelihood of existential risk from AI by providing resources, networking opportunities and support to early career, independent and transitioning researchers

- AI Safety Atlas: the central repository of AI Safety research, distilled into clear, interconnected and actionable knowledge

- AI Snake Oil: debunking hype about AI’s capabilities and transformative effects

- DARPA’s Assured Autonomy Tools Portal

- AVID: AI Vulnerability Database, an open-source, extensible knowledge base of AI failures

- Awful AI: a collection of scary AI use cases

- CO/AI: actionable resources & strategies for the AI era

- Data Cards Playbook: a toolkit for transparency in AI dataset documentation

- DHS AI: guidance on responsible adoption of GenAI in homeland security, including pilot programs insights, safety measures, and use cases

- ECSS’s Space engineering – Machine learning qualification handbook

- Google’s Responsible Generative AI Toolkit

- Hacker News on The Best Language for Safety-Critical Software

- MITRE ATLAS: navigate threats to AI systems through real-world insights

- ML Safety: the ML research community focused on reducing risks from AI systems

- MLSecOps by Protect AI

- OWASP’s Top 10 for LLM Applications & Generative AI

- Paul Niquette’s Software Does Not Fail essay

- RobustML: community-run hub for learning about robust ML

- Safety Architectures for AI Systems: part of the Fraunhofer IKS services landing page

- SEBoK Verification and Validation of Systems in Which AI is a Key Element

- StackOverflow discussion on Python coding standards for Safety Critical applications

- The gospel of Trustworthy AI according to

🏁 Meta

- safety-critical-systems GitHub topic

- Awesome LLM Apps: a collection of awesome LLM apps with AI Agents and RAG using OpenAI, Anthropic, Gemini and opensource models

- Awesome Python Data Science: (probably) the best curated list of data science software in Python

- Awesome MLOps: a curated list of awesome MLOps tools

- Awesome Production ML: a curated list of awesome open source libraries that will help you deploy, monitor, version, scale, and secure your production machine learning

- Awesome Prompt Hacking: an awesome list of curated resources on prompt hacking and AI safety

- Awesome Trustworthy AI: list covering different topics in emerging research areas including but not limited to out-of-distribution generalization, adversarial examples, backdoor attack, model inversion attack, machine unlearning, &c.

- Awesome Responsible AI: a curated list of awesome academic research, books, code of ethics, courses, data sets, frameworks, institutes, maturity models, newsletters, principles, podcasts, reports, tools, regulations and standards related to Responsible, Trustworthy, and Human-Centered AI

- Awesome Safety Critical: a list of resources about programming practices for writing safety-critical software

- Common Weakness Enumeration: discover AI common weaknesses such as improper validation of generative AI output

- FDA Draft Guidance on AI: regulatory draft guidance from the US Food and Drug Administration, which regulates the development and marketing of Medical Devices in the US (open for comments until April 7th 2025)

About Us

Critical Software is a Portuguese company that specializes in safety- and mission-critical software.

Our mission is to build a better and safer world by creating safe and reliable solutions for demanding industries like Space, Energy, Banking, Defence and Medical.

We get to work every day with a variety of high-profile companies, such as Airbus, Alstom, BMW, ESA, NASA, Siemens, and Thales.

We build AI systems that make a difference by applying AI where trust and impact matter the most.

Our AI solutions are designed to meet the highest standards of safety, reliability, and ethical responsibility.

If it’s true that “everything fails all the time”, the stuff we do has to fail less often… or not at all.

Are you ready to begin your Critical adventure? 🚀 Check out our open roles.

Contributions

📣 We’re actively looking for maintainers and contributors!

AI is a rapidly developing field and we are extremely open to contributions, whether it be in the form of issues, pull requests or discussions.

For detailed information on how to contribute, please read our guidelines.

Contributors

Citation

If you found this repository helpful, please consider citing it using the following:

@misc{Galego_Awesome_Safety-Critical_AI,
  author = {Galego, João and Reis Nunes, Pedro and França, Fernando and Roque, Miguel and Almeida, Tiago and Garrido, Carlos},
  title = {Awesome Safety-Critical AI},
  url = {https://github.com/JGalego/awesome-safety-critical-ai}
}